Overview

In the previous post, I covered how to upload and synchronize images to a cloud storage bucket (OCI). Now, I’ll walk through implementing the feed registration API, which handles post content and multiple images. Let’s design and implement this feature while considering key requirements and potential edge cases.

Feature Requirements

We need to build a feature to post SNS-style feed content.

To register a post in the app, both images and text must be included. The user can upload up to 10 images before finalizing the post. Once the post is ready, the images stored in a buffer bucket should be moved to a disk bucket, and the originals in the buffer bucket should be deleted.

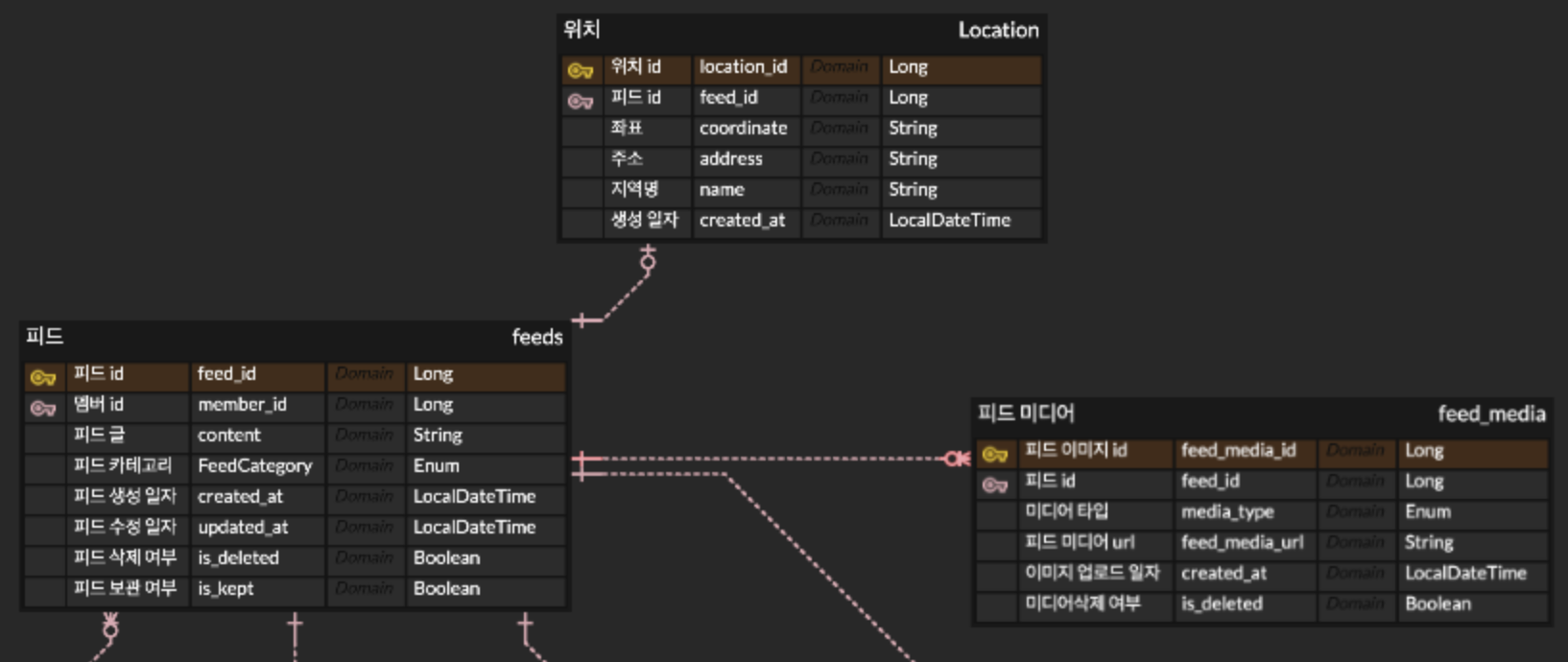
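The limits in the requirement can be checked before any bucket or database work starts. Below is a minimal sketch of such a pre-check, not code from the project: FeedRequestValidator, its method, and the use of plain strings for image URLs are all hypothetical. Because the entity design treats media as optional, the sketch only enforces the upper bound of 10 images and a non-blank text.

```java
import java.util.List;

/** Hypothetical pre-check for a feed registration request. */
final class FeedRequestValidator {

    // Limit taken from the requirement: at most 10 images per post.
    static final int MAX_IMAGES = 10;

    private FeedRequestValidator() {
    }

    /**
     * Throws IllegalArgumentException when the text is blank or the
     * image list exceeds the limit. A null image list counts as empty.
     */
    static void validate(String content, List<String> imageUrls) {
        if (content == null || content.isBlank()) {
            throw new IllegalArgumentException("Post text must not be blank");
        }
        int imageCount = (imageUrls == null) ? 0 : imageUrls.size();
        if (imageCount > MAX_IMAGES) {
            throw new IllegalArgumentException(
                    "At most " + MAX_IMAGES + " images are allowed, got " + imageCount);
        }
    }
}
```

Failing fast here means a rejected request never triggers a copy between buckets.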

Here’s the current design for the core feed-related entities:

Note that location and media (images) are optional fields when registering a feed. In other words, the system must handle cases where those values are absent.

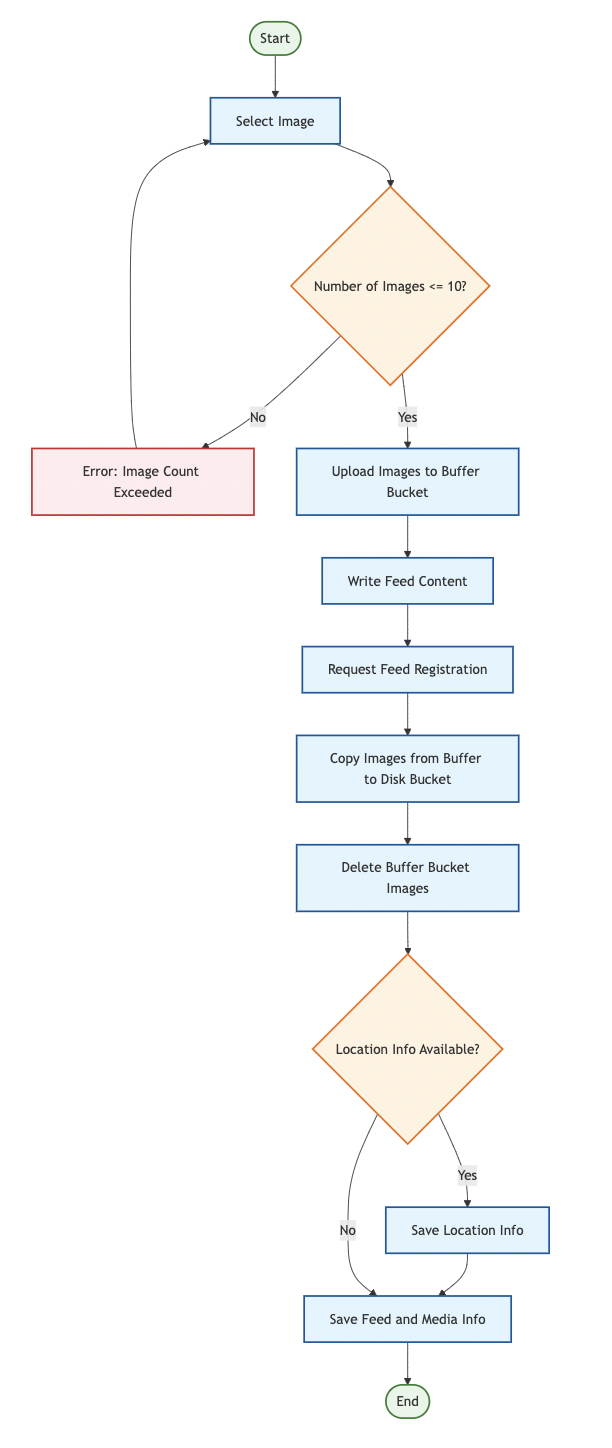

Functional Flow

The requirements are relatively complex, so I modeled the high-level flow to better understand the behavior. Here’s a Mermaid-based diagram:

In short:

- Images are uploaded before the post is created.

- The client holds the image URLs, not the server.

- When the post is finalized, those images are moved from the buffer bucket to the disk bucket.

- If location data is present, it is also saved. Otherwise, only feed and feed media are persisted.
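The "move" step in the flow above is really a copy followed by a delete. The sketch below illustrates that ordering with two in-memory maps standing in for the buckets. It does not use the OCI SDK, and BucketMoveSketch, moveToDisk, and the disk:// URL format are hypothetical names chosen for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.NoSuchElementException;

/** In-memory stand-in for the two buckets; not the OCI SDK. */
final class BucketMoveSketch {

    final Map<String, byte[]> bufferBucket = new HashMap<>();
    final Map<String, byte[]> diskBucket = new HashMap<>();

    /** Copies the object to the disk bucket first, then deletes the buffer original. */
    String moveToDisk(String bufferKey, String diskKey) {
        byte[] data = bufferBucket.get(bufferKey);
        if (data == null) {
            throw new NoSuchElementException("No buffered object: " + bufferKey);
        }
        diskBucket.put(diskKey, data.clone()); // copy
        bufferBucket.remove(bufferKey);        // delete only after the copy succeeded
        return "disk://" + diskKey;            // hypothetical URL format
    }
}
```

Deleting only after the copy has succeeded means a failure in the middle leaves the original in the buffer bucket, so the operation can be retried.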

Checklist of Features

- Images uploaded to the buffer bucket are not recorded in the main DB, so they are named using identifiers for easy tracking, and each upload is logged (the logs are stored in MongoDB).

- Upload multiple images to the buffer bucket and return the upload result.

- After registering the feed, move the images to the disk bucket and return updated URLs.

- Save the updated image URLs in the feed media entity using JPA.

- If a location exists, also persist the location entity to the DB.

Additional Consideration

- Each image must have an index to maintain order.

Because feed images need to preserve order, an index must be associated with each image. However, a parallel stream (parallelStream()) runs its mapping function on several threads in no guaranteed order, so a shared counter incremented inside it would be unsafe without synchronization, and even a synchronized one would hand out indexes in a nondeterministic order. So I decided to have the client send the index along with the upload request to ensure consistency.
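To illustrate the idea, here is a small self-contained sketch in which the index travels with each element instead of being generated inside the parallel stream. ImageMeta, Copied, and copyAll are hypothetical stand-ins for the real metadata DTO and copy call, and the "disk/" prefix is made up.

```java
import java.util.Comparator;
import java.util.List;

final class IndexedParallelSketch {

    /** Hypothetical stand-ins for the upload metadata and the copy result. */
    record ImageMeta(int index, String fileName) {}
    record Copied(int index, String url) {}

    /**
     * Maps each image in parallel. The index is part of the element, so the
     * lambda never touches shared mutable state; the final sort makes the
     * result order independent of thread scheduling and of input order.
     */
    static List<Copied> copyAll(List<ImageMeta> images) {
        return images.parallelStream()
                .map(meta -> new Copied(meta.index(), "disk/" + meta.fileName()))
                .sorted(Comparator.comparingInt(Copied::index))
                .toList();
    }
}
```

Even if the client sends the images out of order, sorting by the client-supplied index restores the intended sequence.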

Implementation

Here’s the implementation of the service logic for the checklist above (excluding full controller or repository code for brevity):

    // Imports assume Spring, Lombok, SLF4J, and the OCI Java SDK;
    // project-specific imports (entities, DTOs, repositories) are omitted.
    import java.time.LocalDateTime;
    import java.util.List;

    import com.oracle.bmc.model.BmcException;
    import lombok.RequiredArgsConstructor;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.stereotype.Service;
    import org.springframework.transaction.annotation.Transactional;

    @Service
    @RequiredArgsConstructor
    public class FeedService {

        // Assumes SLF4J; the original excerpt used `logger` without declaring it.
        private static final Logger logger = LoggerFactory.getLogger(FeedService.class);

        private final FeedRepository feedRepository;
        private final FeedMediaRepository feedMediaRepository;
        private final MemberRepository memberRepository;
        private final LocationRepository locationRepository;
        private final ObjectStorageService objectStorageService;
        private final MemberService memberService;

        @Transactional
        public FeedPostResponse registerFeed(List<FileMetadataDto> metadataList, FeedRequest feedRequest) {
            MemberDto memberDto = memberService.getMember();
            Member member = memberRepository.findById(memberDto.getId()).orElseThrow();

            // Save the feed entity
            Feed feed = Feed.of(member, feedRequest.getContent(), feedRequest.getCategory(),
                    LocalDateTime.now(), LocalDateTime.now());
            feedRepository.save(feed);

            // Move images from buffer bucket to disk bucket.
            // No repository calls happen inside the parallel section.
            List<FeedTempDto> finalizedFeed = metadataList.parallelStream()
                    .map(metadata -> {
                        try {
                            String finalFileName = String.format("%s/%s/%s?%s&%s",
                                    member.getId(),
                                    "feed",
                                    feed.getId(),
                                    "width=" + metadata.getWidth() + "&height=" + metadata.getHeight(),
                                    "index=" + metadata.getIndex());
                            String diskUrl = objectStorageService.copyToDisk(metadata, finalFileName);
                            return FeedTempDto.builder()
                                    .url(diskUrl)
                                    .index(metadata.getIndex())
                                    .build();
                        } catch (BmcException e) {
                            logger.error("Failed to copy file: {}", metadata.getOriginalFileName(), e);
                            // An unchecked exception rolls back the surrounding transaction.
                            throw new RuntimeException("Failed to copy file: " + metadata.getOriginalFileName(), e);
                        }
                    })
                    .toList();

            // Save media info to DB
            finalizedFeed.forEach(feedTempData -> {
                FeedMedia feedMedia = FeedMedia.of(feed, "IMAGE", feedTempData.getUrl(), feedTempData.getIndex(),
                        LocalDateTime.now(), LocalDateTime.now());
                feedMediaRepository.save(feedMedia);
            });

            // Save location if it exists (the request object itself may be absent)
            LocationRequest locationRequest = feedRequest.getLocation();
            if (locationRequest != null && locationRequest.getAddress() != null) {
                Location location = Location.of(feed, locationRequest.getCoordinate(), locationRequest.getAddress(),
                        locationRequest.getName(), LocalDateTime.now(), LocalDateTime.now());
                locationRepository.save(location);
            }

            return FeedPostResponse.builder()
                    .id(feed.getId().toString())
                    .feedTempDtos(finalizedFeed)
                    .content(feed.getContent())
                    .build();
        }
    }
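The object name built inside the lambda can be pulled out into a small pure function, which makes the naming scheme easy to test on its own. The sketch below is a hypothetical extraction: FeedObjectNames, the method name, and the parameter types are assumptions, and only the layout of the name comes from the String.format call in registerFeed.

```java
final class FeedObjectNames {

    private FeedObjectNames() {
    }

    /**
     * Builds "<memberId>/feed/<feedId>?width=<w>&height=<h>&index=<i>",
     * the same layout as the String.format call in registerFeed.
     * The ID types are left as Object because the excerpt does not show them.
     */
    static String finalFileName(Object memberId, Object feedId, int width, int height, int index) {
        return String.format("%s/feed/%s?width=%s&height=%s&index=%s",
                memberId, feedId, width, height, index);
    }
}
```

For example, finalFileName(1L, 42L, 800, 600, 0) yields 1/feed/42?width=800&height=600&index=0.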

Summary of Key Logic

Retrieve the current member (user):

    MemberDto memberDto = memberService.getMember();
    Member member = memberRepository.findById(memberDto.getId()).orElseThrow();

Save the feed’s main info:

    Feed feed = Feed.of(member, feedRequest.getContent(), feedRequest.getCategory(), LocalDateTime.now(), LocalDateTime.now());
    feedRepository.save(feed);

Process the images:

    List<FeedTempDto> finalizedFeed = metadataList.parallelStream()
            .map(metadata -> {
                ...
                String diskUrl = objectStorageService.copyToDisk(metadata, finalFileName);
                ...
            })
            .toList();

Since we’re using parallel streams, I avoided incrementing a shared index and instead had the client specify the order via metadata.

Save feed media:

    finalizedFeed.forEach(feedTempData -> {
        FeedMedia feedMedia = FeedMedia.of(feed, "IMAGE", feedTempData.getUrl(), feedTempData.getIndex(), LocalDateTime.now(), LocalDateTime.now());
        feedMediaRepository.save(feedMedia);
    });

Handle optional location info:

    // Guard against a missing location object as well as a missing address
    if (locationRequest != null && locationRequest.getAddress() != null) {
        Location location = Location.of(feed, locationRequest.getCoordinate(), locationRequest.getAddress(), locationRequest.getName(), LocalDateTime.now(), LocalDateTime.now());
        locationRepository.save(location);
    }
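Since location is optional, the request object itself may be absent, not just its address. One way to cover both cases in a single expression is Optional. The sketch below uses a hypothetical, trimmed-down LocationRequest record rather than the project’s DTO, and locationToSave is a made-up helper name.

```java
import java.util.Optional;

final class OptionalLocationSketch {

    /** Hypothetical, trimmed-down version of the request DTO. */
    record LocationRequest(String address, String name) {}

    /** Returns the location only when the request and its address are both present. */
    static Optional<LocationRequest> locationToSave(LocationRequest request) {
        return Optional.ofNullable(request)
                .filter(r -> r.address() != null);
    }
}
```

The caller can then persist the location with ifPresent, and a feed without location data simply skips that step.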

Return the final response:

    return FeedPostResponse.builder()
            .id(feed.getId().toString())
            .feedTempDtos(finalizedFeed)
            .content(feed.getContent())
            .build();

Final Thoughts

There are definitely areas in this service that could use a lot of refactoring. But for now, my priority is to complete and test all critical features. Once that’s done, I’ll revisit this and polish the code structure for readability, maintainability, and scalability.

you can see the code in